Fine-tuning large language models (LLMs) allows you to customize pre-trained models for specific tasks without starting from scratch. This process is cost-effective, improves task accuracy, and enables faster deployment of AI solutions. Here's what you need to know:

Fine-tuning bridges the gap between general-purpose AI and task-specific performance. Whether you're in healthcare, finance, or customer support, this guide provides actionable steps to optimize your models effectively.

Fine-tuning large language models (LLMs) can be approached in a few different ways, depending on your goals. The main categories are feature-based, parameter-based, and adapter-based fine-tuning [6].

| Method Type | Description | Example Use Case |
|---|---|---|
| Feature-based | Leverages model embeddings without altering parameters | Using BERT embeddings for sentiment analysis |
| Parameter-based | Modifies the model's internal parameters | Training GPT-3 on company-specific data for chatbots |
| Adapter-based | Trains small modules within the pre-trained model | Cost-effective solutions for enterprise applications |
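The practical difference between the three approaches is how many parameters get updated. As a minimal sketch, the code below counts trainable parameters for a hypothetical toy model made of `num_layers` dense layers of width `d` (with biases) plus a newly added classification head. The sizes, the `bottleneck` adapter width, and all function names are illustrative assumptions. Real transformer blocks contain more components, so read the numbers as relative rather than absolute.

```python
# Toy comparison of trainable parameters per fine-tuning approach.
# Assumption: a hypothetical backbone of `num_layers` dense d x d layers with
# biases, plus a new linear classification head. Not a real model architecture.

def base_params(d: int, num_layers: int) -> int:
    """Parameters in the pre-trained backbone: weights plus biases per layer."""
    return num_layers * (d * d + d)


def head_params(d: int, num_classes: int) -> int:
    """Parameters in a newly added linear classification head."""
    return d * num_classes + num_classes


def trainable_params(method: str, d: int, num_layers: int,
                     num_classes: int, bottleneck: int = 16) -> int:
    head = head_params(d, num_classes)
    if method == "feature":
        # Backbone frozen: only the head is trained on the model's embeddings.
        return head
    if method == "parameter":
        # Full fine-tuning: every backbone parameter plus the head.
        return base_params(d, num_layers) + head
    if method == "adapter":
        # One bottleneck adapter per layer (down-projection d->r and
        # up-projection r->d, each with a bias); backbone stays frozen.
        per_adapter = (d * bottleneck + bottleneck) + (bottleneck * d + d)
        return num_layers * per_adapter + head
    raise ValueError(f"unknown method: {method}")


if __name__ == "__main__":
    for m in ("feature", "adapter", "parameter"):
        print(m, trainable_params(m, d=768, num_layers=12, num_classes=2))
```

With `d=768`, 12 layers, and 2 classes, this counts 1,538 trainable parameters for feature-based, 305,858 for adapter-based, and 7,088,642 for parameter-based (full) fine-tuning.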

Once you've decided on your approach, the next step is to ensure your data is prepared to deliver the best possible results.

The quality of your data plays a huge role in how well your fine-tuned model performs. The process includes gathering domain-specific data, cleaning it to remove inconsistencies, and formatting it to meet the model's input requirements [6][2]. These steps directly influence how effectively the model learns.
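The cleaning and formatting steps can be sketched in a few lines. This is a minimal example, assuming your raw data is a list of (prompt, completion) string pairs; the `prompt`/`completion` field names and the helper names are hypothetical, so check which input format your target model or platform actually expects.

```python
import json
import re


def clean_text(text: str) -> str:
    """Normalize whitespace: collapse runs of spaces/newlines and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def prepare_records(raw_pairs):
    """Clean (prompt, completion) pairs, dropping incomplete and duplicate ones."""
    seen = set()
    cleaned = []
    for prompt, completion in raw_pairs:
        p, c = clean_text(prompt), clean_text(completion)
        if not p or not c:
            continue  # skip examples missing an input or an output
        key = (p.lower(), c.lower())
        if key in seen:
            continue  # skip duplicates that only differed in case or spacing
        seen.add(key)
        cleaned.append({"prompt": p, "completion": c})
    return cleaned


def to_jsonl(records) -> str:
    """Serialize one JSON object per line (JSON Lines)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


raw = [
    ("  What is LoRA? ", "A low-rank adaptation method."),
    ("What is LoRA?", "A low-rank  adaptation method."),  # near-duplicate
    ("", "orphan answer"),                                 # missing prompt
]
print(to_jsonl(prepare_records(raw)))
```

Case-insensitive deduplication is a design choice here; for case-sensitive domains such as code or chemical names, key on the cleaned text directly.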

Key steps for data preparation:

Setting up your model involves choosing the right base model and the training hyperparameters, such as learning rate, batch size, and number of epochs. This step lays the groundwork for successful training.

Model Selection and Setup:

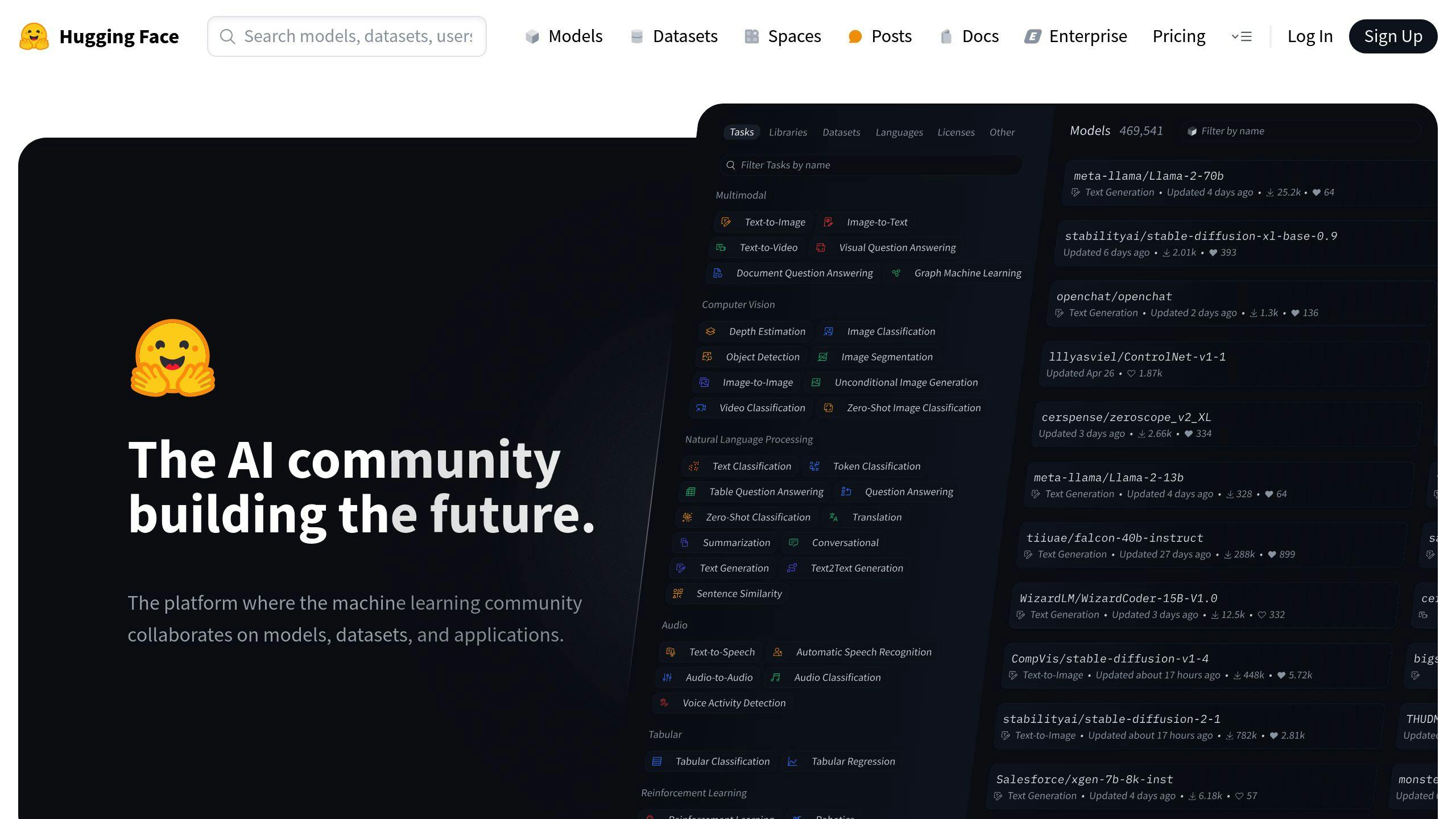

For a smoother process, tools like Hugging Face's Transformers library offer pre-built APIs and a wide range of models to get you started [2].

With your model and data ready, you can start exploring advanced fine-tuning strategies to push performance even further.

Building on basic methods like parameter-based fine-tuning, advanced strategies aim to improve efficiency and tailor models for specific needs.

Parameter-efficient techniques like Low-Rank Adaptation (LoRA) reduce computational requirements by freezing the pre-trained weights and training only small low-rank matrices added alongside them. This approach can lower memory and compute usage by up to 90% [5][7]. It also makes fine-tuning feasible on standard hardware, for example with models like BERT or T5 loaded through Hugging Face's Transformers library [2]; LoRA support itself comes from Hugging Face's companion PEFT library.
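A minimal pure-Python sketch of the LoRA idea for a single linear layer: the frozen weight `W` is left untouched, and the layer adds a scaled low-rank update `(alpha / r) * B @ A`. Following the usual LoRA initialization, `A` starts with small random values and `B` with zeros, so the adapted layer initially reproduces the pre-trained one. The class and helper names are hypothetical; libraries such as PEFT implement the same idea on tensors, not Python lists.

```python
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A.

    A has shape r x d_in and B has shape d_out x r, so the effective weight
    is W + (alpha / r) * B @ A. Only A and B would be trained.
    """

    def __init__(self, weight, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen pre-trained weights, never updated
        d_out, d_in = len(weight), len(weight[0])
        self.scale = alpha / r
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]  # zero init: no change at start

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def param_counts(d_out, d_in, r):
    """(full fine-tuning params, LoRA params) for one d_out x d_in weight."""
    return d_out * d_in, r * (d_in + d_out)


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1, alpha=2.0)
print(layer.forward([1.0, 1.0]))   # equals the frozen layer's output at init
print(param_counts(4096, 4096, 8))
```

For a 4096 x 4096 projection with `r=8`, `param_counts` gives 16,777,216 weights for full fine-tuning versus 65,536 LoRA parameters, roughly 0.4% of the layer.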

Architectures such as Mixture of Experts (MoE) and memory-augmented networks are designed for specialized tasks. For example, JPMorgan Chase used Mixture of Experts to fine-tune its models for analyzing legal documents, achieving higher precision [3].

| Architecture Type | Key Benefits | Best Use Cases |
|---|---|---|
| Mixture of Experts | Focused processing for specific tasks | Legal document analysis, Multi-domain tasks |
| Memory-augmented Networks | Improved ability to retain information | Long-form content, Complex reasoning |
| Hybrid Architectures | Combines strengths of multiple approaches | Enterprise-scale applications |
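The "focused processing" in a Mixture of Experts comes from a router that sends each input to only a few experts. Below is a minimal sketch of generic top-k gating: a linear router scores each expert, the top `k` are selected, and their outputs are mixed with weights renormalized over the chosen experts. The experts here are toy callables and the function names are hypothetical; this illustrates the general MoE mechanism, not the setup used in the JPMorgan example.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route input x to the top-k experts and combine their outputs.

    gate_weights: one weight vector per expert (a linear router).
    experts: callables mapping a vector to a vector of the same length.
    Returns (combined output, indices of the experts that were used).
    """
    logits = [sum(w * xi for w, xi in zip(g, x)) for g in gate_weights]
    probs = softmax(logits)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        weight = probs[i] / norm  # renormalize over the selected experts only
        y = experts[i](x)         # unselected experts are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top


experts = [
    lambda v: [2 * a for a in v],  # toy expert 0
    lambda v: list(v),             # toy expert 1
    lambda v: [0.0 for _ in v],    # toy expert 2
]
gates = [[5.0, 0.0], [0.0, 0.0], [-5.0, 0.0]]
print(moe_forward([1.0, 0.0], gates, experts, k=1))
```

Because only `k` experts run per input, total capacity can grow with the number of experts while per-input compute stays roughly constant.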

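Refining model outputs can be achieved with optimization approaches like Proximal Policy Optimization (PPO) and Direct Preference Optimization (DPO) [1][4]. PPO is the reinforcement-learning algorithm commonly used in reinforcement learning from human feedback (RLHF), where a separately trained reward model scores the model's outputs; DPO skips the reward model and trains directly on pairs of preferred and rejected responses. Both methods help align models with specific goals while keeping training stable.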
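The two objectives can be written out for a single example. This is a sketch of the standard published formulas, not code from any particular library: `dpo_loss` takes summed token log-probabilities of the chosen and rejected responses under the model being trained and under a frozen reference model, and `ppo_clipped_objective` computes PPO's clipped surrogate for one action. The defaults `beta=0.1` and `epsilon=0.2` are common choices but should be treated as assumptions to tune.

```python
import math


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one preference pair (lower is better).

    Each argument is log p(response | prompt) summed over tokens. The implicit
    reward of a response is beta * (policy log-prob - reference log-prob).
    """
    margin = (policy_chosen - ref_chosen) - (policy_rejected - ref_rejected)
    return -log_sigmoid(beta * margin)


def ppo_clipped_objective(ratio, advantage, epsilon=0.2):
    """PPO clipped surrogate for one action (to be maximized).

    ratio = pi_new(a|s) / pi_old(a|s). Clipping caps how much a single update
    can profit from moving the policy, which keeps training stable.
    """
    clipped = max(min(ratio, 1 + epsilon), 1 - epsilon)
    return min(ratio * advantage, clipped * advantage)


# Equal preference under policy and reference gives loss log(2);
# favoring the chosen response more than the reference does lowers it.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
print(dpo_loss(-5.0, -20.0, -10.0, -10.0))
```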

Key points to consider when using these strategies include:

For instance, Nuance's Dragon Medical One incorporates these optimization techniques to accurately transcribe and organize patient notes [3].

These advanced approaches are essential for streamlining fine-tuning processes and achieving better results in specialized applications.

Choosing the right tools and platforms can make a big difference in how effectively you fine-tune models to meet your business needs.

OpenAI's API simplifies the fine-tuning process, making it easy to get started. You upload a JSONL (JSON Lines) file in which each line is one training example pairing an input with the desired output, choose a base model, optionally set hyperparameters such as the number of epochs, batch size, and learning rate multiplier, and monitor progress as the job runs through metrics such as training loss and validation loss.
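For chat models, OpenAI's fine-tuning format puts each example on its own line as an object with a `messages` list of `role`/`content` entries. The sketch below builds and sanity-checks such examples before upload; the helper names are hypothetical, and the rule that every example must end with an assistant reply is this sketch's own check, not an API requirement quoted from the documentation.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}


def to_chat_example(user_text, assistant_text, system_text=None):
    """Build one training example in the chat fine-tuning layout."""
    messages = []
    if system_text:
        messages.append({"role": "system", "content": system_text})
    messages.append({"role": "user", "content": user_text})
    messages.append({"role": "assistant", "content": assistant_text})
    return {"messages": messages}


def validate_example(example):
    """Known roles, non-empty content, and an assistant reply as the target."""
    msgs = example.get("messages", [])
    if not msgs or msgs[-1]["role"] != "assistant":
        return False
    return all(m["role"] in ALLOWED_ROLES and m["content"].strip() for m in msgs)


def to_jsonl(examples):
    """One JSON object per line; write the result to a .jsonl file for upload."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples)


ex = to_chat_example("Summarize this note.", "Patient stable.",
                     system_text="You are a clinical assistant.")
print(validate_example(ex))
print(to_jsonl([ex]))
```

After saving the output to a file, the official `openai` Python package can upload it with `client.files.create(..., purpose="fine-tune")` and start a job with `client.fine_tuning.jobs.create(training_file=..., model=...)`; check OpenAI's current documentation for supported base models and hyperparameter names.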

Hugging Face provides a well-rounded platform for fine-tuning models, offering tools and resources like:

| Feature | What It Offers |
|---|---|
| Model and Dataset Libraries | Access to pre-trained models and tools for managing large datasets |
| Training Infrastructure | Automated pipelines for scaling up to production-level development |

For example, JPMorgan Chase used Hugging Face's infrastructure to analyze legal documents, improving the accuracy of contract reviews [3].

Artech Digital delivers tailored fine-tuning services for various industries, broken into three tiers:

They have expertise in healthcare, optimizing processes like clinical documentation workflows.

When deciding on a platform, think about factors like infrastructure (OpenAI's managed approach vs. Hugging Face's self-hosted flexibility), budget, technical expertise, and data privacy requirements.

After choosing a platform, the next step is deploying and maintaining your fine-tuned model to keep it performing well over time.

Choosing the right deployment strategy plays a big role in the success of your fine-tuned LLM. Each option has its own advantages and trade-offs when it comes to control, scalability, and cost.

| Deployment Option | Key Benefits | Considerations |
|---|---|---|
| Cloud-based Platforms | Scales automatically, minimal setup needed | Can get expensive over time, less flexible |
| API Integrations | Quick to implement, regular updates | Limited customization, usage-based pricing |
| On-premise Solutions | Full control, enhanced security | Higher upfront costs, ongoing maintenance |
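The cost trade-off between usage-based pricing and on-premise hardware comes down to a break-even volume. A minimal sketch of that arithmetic follows; every price in it is a hypothetical placeholder, and it deliberately ignores staffing, power, and scaling effects, so plug in your own figures.

```python
def monthly_api_cost(tokens_per_month, price_per_million_tokens):
    """Usage-based pricing: cost grows linearly with volume."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def monthly_onprem_cost(upfront, amortize_months, maintenance_per_month):
    """Fixed cost: hardware amortized over its service life, plus upkeep."""
    return upfront / amortize_months + maintenance_per_month


def break_even_tokens(price_per_million_tokens, upfront, amortize_months,
                      maintenance_per_month):
    """Monthly token volume above which on-premise becomes the cheaper option."""
    fixed = monthly_onprem_cost(upfront, amortize_months, maintenance_per_month)
    return fixed / price_per_million_tokens * 1_000_000


# Hypothetical figures: $2 per million tokens vs. $36,000 of hardware
# amortized over 36 months plus $1,000/month maintenance.
print(break_even_tokens(2.0, 36_000, 36, 1_000))
```

With these placeholder numbers the fixed side costs $2,000 per month, so on-premise pays off only above about one billion tokens per month.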

Once your model is deployed, keeping it accurate and performing well is essential for long-term success.

Regularly retraining your model with domain-specific data helps ensure it stays accurate over time.
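One simple way to decide when retraining is due is to track accuracy over a rolling window of recent predictions and flag the model once it drops below a threshold. The sketch below assumes you can obtain a correct answer for some production outputs (for example, from human review); the class name, window size, and threshold are hypothetical.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=100, threshold=0.9):
        self.results = deque(maxlen=window)  # oldest results fall off automatically
        self.threshold = threshold

    def record(self, prediction, expected):
        self.results.append(prediction == expected)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_retraining(self):
        """True once the window is full and accuracy is below the threshold."""
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.threshold


monitor = AccuracyMonitor(window=4, threshold=0.75)
for pred, gold in [("yes", "yes")] * 4 + [("no", "yes")] * 2:
    monitor.record(pred, gold)
print(monitor.accuracy(), monitor.needs_retraining())
```

Waiting for a full window avoids triggering retraining on the first few noisy samples.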

Focus on tracking these key performance metrics:

Efficient scaling is crucial to handle growing demands while keeping expenses in check. Techniques like LoRA and QLoRA are especially helpful for cost-effective scaling [5].

"Fine-tuning can significantly enhance model performance on specific tasks, while techniques like LoRA and QLoRA reduce memory and compute requirements, making fine-tuning accessible on less powerful hardware" [5].
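The memory savings are easy to estimate. QLoRA stores the frozen base model in 4-bit precision and trains LoRA adapters on top, while full fine-tuning with mixed-precision Adam is commonly estimated at about 16 bytes per parameter (16-bit weights and gradients plus 32-bit master weights and two optimizer moments). The sketch below applies those rules of thumb to a hypothetical 7-billion-parameter model; it counts weights and optimizer state only, not activations, adapters, or quantization overhead.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def full_finetune_memory_gb(num_params, bytes_per_param=16):
    """Rule of thumb for mixed-precision Adam, before activations."""
    return num_params * bytes_per_param / 1e9


n = 7_000_000_000  # hypothetical 7B-parameter model
print(weight_memory_gb(n, 16))      # 16-bit weights
print(weight_memory_gb(n, 4))       # 4-bit quantized weights, as in QLoRA
print(full_finetune_memory_gb(n))   # full fine-tuning estimate
```

For this model that is roughly 14 GB of 16-bit weights, 3.5 GB after 4-bit quantization, and about 112 GB for full fine-tuning state, which is why QLoRA fits on a single consumer-class GPU where full fine-tuning does not.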

For large-scale deployments, consider these strategies:

| Strategy | Implementation | Impact |
|---|---|---|
| Incremental Learning | Update with new data | Keeps accuracy high without full retraining |
| Resource Optimization | Use efficient training methods | Lowers computational costs |
| Load Balancing | Spread processing across servers | Enhances reliability and response times |
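Load balancing for model serving is often done by sending each request to the replica with the fewest requests in flight, since LLM calls vary widely in duration. The sketch below shows that "least outstanding requests" policy; the class and replica names are hypothetical, and it is single-threaded, so a real server would need a lock or an atomic counter around `acquire` and `release`.

```python
class LeastLoadedBalancer:
    """Route each request to the replica with the fewest in-flight requests."""

    def __init__(self, replicas):
        self.in_flight = {name: 0 for name in replicas}

    def acquire(self):
        # min() returns the first replica among ties, in insertion order.
        name = min(self.in_flight, key=self.in_flight.get)
        self.in_flight[name] += 1
        return name

    def release(self, name):
        """Call when the replica finishes a request."""
        self.in_flight[name] -= 1


lb = LeastLoadedBalancer(["gpu-a", "gpu-b"])
print([lb.acquire() for _ in range(3)])  # spreads requests across replicas
```

Compared with plain round-robin, this policy adapts automatically when one replica is stuck on a long generation.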

Many enterprises use a mix of cloud resources and dedicated infrastructure to handle sensitive data securely while meeting performance needs.

When scaling, focus on three key areas:

Fine-tuning large language models (LLMs) helps businesses customize AI for specific tasks while cutting costs through methods like LoRA and QLoRA. Success stories from various industries highlight how tailored AI solutions can address unique challenges and deliver precise results [5].

| Aspect | Key Impact |
|---|---|
| Industry Adaptation | Better handling of specialized terminology for more accurate outputs |
| Resource Efficiency | Lower computational demands with LoRA/QLoRA, reducing overall expenses |
| Performance | Higher accuracy in domain-specific tasks, supporting better decisions |

Examples like JPMorgan's legal document analysis and Nuance's medical transcription show how fine-tuned LLMs are reshaping industries [3]. These real-world applications underline the practical benefits of fine-tuning in fields like finance and healthcare.

With these advantages, businesses can take meaningful steps to integrate fine-tuning into their workflows.

While fine-tuning LLMs might seem challenging, platforms like Hugging Face and OpenAI have made the process more straightforward [2]. With the right approach, companies can efficiently implement fine-tuned solutions that deliver impactful results.

To get started:

The future of fine-tuning holds even more promise, with ongoing advancements making it easier and more efficient. By adopting these strategies, businesses can craft AI solutions that are not only effective but also tailored to their distinct needs [7].

Here are answers to some common questions about fine-tuning large language models (LLMs), building on the techniques and tools covered earlier.

Yes, you can fine-tune an LLM to make it more suitable for specific tasks, such as generating customized responses or working with specialized data. This process involves tailoring pre-trained models to handle unique language patterns and terminologies [1].

There are several approaches to fine-tuning LLMs, each serving different purposes and resource levels:

| Method Type | Description | Best Use Case |
|---|---|---|
| Feature Extraction | Uses the frozen model's representations as input features for a small, newly trained task layer | Quick domain adjustments |
| Full Fine-tuning | Modifies all model parameters | Full-scale customization |
| Supervised Fine-tuning | Uses labeled datasets | Task-specific optimization |
| Unsupervised Fine-tuning | Leverages unlabeled text data | Broader domain adaptation |
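For causal language models, supervised and unsupervised fine-tuning differ mainly in how training targets are built. The sketch below assumes token IDs already produced by a tokenizer and follows the common convention that targets equal to `-100` are ignored (the default `ignore_index` of PyTorch's `CrossEntropyLoss`). It also assumes a Hugging Face-style causal model that shifts labels internally, so labels line up with `input_ids`. Function names are hypothetical.

```python
IGNORE_INDEX = -100  # targets with this value are skipped by the loss


def supervised_example(prompt_ids, completion_ids):
    """Labeled pair: the loss covers only the completion (desired output) tokens."""
    input_ids = prompt_ids + completion_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + completion_ids
    return {"input_ids": input_ids, "labels": labels}


def unsupervised_examples(token_ids, block_size):
    """Unlabeled text: fixed-size blocks in which every token is a target.

    A trailing remainder shorter than block_size is dropped.
    """
    blocks = []
    for start in range(0, len(token_ids) - block_size + 1, block_size):
        block = token_ids[start:start + block_size]
        blocks.append({"input_ids": block, "labels": list(block)})
    return blocks


print(supervised_example([101, 102], [201, 202]))
print(unsupervised_examples(list(range(7)), block_size=3))
```

Masking the prompt keeps the model from being trained to reproduce the inputs, so its updates concentrate on producing the desired outputs.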

Techniques like LoRA and QLoRA help reduce computational demands, making fine-tuning more efficient while maintaining strong performance [5].

To fine-tune effectively, focus on resource optimization and performance by following these steps:

These FAQs offer a starting point for understanding fine-tuning and applying it to specific industry needs.